In the realm of artificial intelligence (AI), the choice of hardware can make or break a project. As organizations and researchers push deeper into AI training and inference, supply chain constraints and long wait lists for Nvidia's highly sought-after H100 GPU force a critical decision: should teams turn to consumer graphics cards such as AMD Radeon or Nvidia GeForce instead? Let's explore why AMD's Radeon cards are emerging as a go-to choice for AI enthusiasts, while Nvidia's GeForce GPUs come with unexpected caveats.

The Peer-to-Peer Conundrum

AMD Radeon: The Peer-to-Peer Champion

AMD's Radeon graphics cards have quietly become a serious option for AI work. One standout feature is their support for peer-to-peer (P2P) transfers between multiple GPUs. P2P lets one GPU read from or write to another GPU's memory directly over the PCIe bus instead of staging the data through host (system) memory, which cuts transfer time and CPU overhead in multi-GPU setups.
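To see whether a given machine actually exposes P2P between its GPUs, you can ask the runtime. The sketch below assumes a PyTorch build (ROCm or CUDA) is installed; ROCm builds expose AMD GPUs through the same `torch.cuda` namespace, and `torch.cuda.can_device_access_peer` reports whether one device can address another's memory directly. The helper name `p2p_matrix` is our own, not part of any library, and the result reflects whatever the installed driver and runtime report.

```python
"""Report which GPU pairs can access each other's memory directly (P2P)."""
from itertools import permutations

try:
    import torch  # works with both ROCm and CUDA builds of PyTorch
except ImportError:  # PyTorch not installed: nothing to probe
    torch = None


def p2p_matrix():
    """Return {(src, dst): bool} for every ordered pair of visible GPUs.

    Returns an empty dict when PyTorch is missing, no GPU is available,
    or fewer than two GPUs are visible.
    """
    if torch is None or not torch.cuda.is_available():
        return {}
    count = torch.cuda.device_count()
    return {
        (src, dst): torch.cuda.can_device_access_peer(src, dst)
        for src, dst in permutations(range(count), 2)
    }


if __name__ == "__main__":
    matrix = p2p_matrix()
    if not matrix:
        print("Fewer than two GPUs visible (or PyTorch not installed).")
    for (src, dst), ok in sorted(matrix.items()):
        print(f"GPU {src} -> GPU {dst}: {'P2P' if ok else 'no P2P'}")
```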

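Why does P2P matter? Imagine training a deep neural network on a colossal dataset across several GPUs. After every step, the GPUs must exchange gradients (or, when a model is split across cards, activations). With P2P, those exchanges travel directly between cards, reducing latency and boosting overall throughput. Whether you're tackling image recognition, natural language processing, or generative models, P2P shortens each training step and therefore the wall-clock time to convergence, even though the number of steps stays the same.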
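A rough model shows why this matters for data-parallel training. The sketch below assumes a ring all-reduce, in which each GPU sends about 2(N - 1)/N times the gradient size per step, and it models the non-P2P case as every hop being staged through host memory in two sequential, unpipelined copies. Real systems overlap communication with compute and can pipeline staged copies, so treat the numbers as illustrative, not as a benchmark. The function name and the example figures (1 GB of gradients, 4 GPUs, a 25 GB/s link) are hypothetical.

```python
"""Back-of-envelope model: gradient all-reduce time with and without P2P."""


def ring_allreduce_seconds(grad_bytes, num_gpus, link_bytes_per_s, p2p=True):
    """Estimate the bandwidth-bound time of one ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N times the gradient size.
    With P2P each hop is a single GPU-to-GPU copy; without it we assume
    every hop is staged through host memory as two unpipelined copies,
    which doubles the transfer time. Per-message latency is ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange on a single GPU
    bytes_per_gpu = 2 * (num_gpus - 1) / num_gpus * grad_bytes
    copies_per_hop = 1 if p2p else 2
    return copies_per_hop * bytes_per_gpu / link_bytes_per_s


if __name__ == "__main__":
    # Hypothetical figures: 1 GB of gradients, 4 GPUs, 25 GB/s per link.
    print(round(ring_allreduce_seconds(1e9, 4, 25e9), 3))             # 0.06
    print(round(ring_allreduce_seconds(1e9, 4, 25e9, p2p=False), 3))  # 0.12
```

Under these assumptions, losing P2P doubles the communication share of every step; how much that hurts overall depends on how much of it the training loop can hide behind compute.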

Nvidia GeForce: The EULA Roadblock

Now, let's shift our gaze to Nvidia's GeForce GPUs. While they excel in gaming and content creation, the End-User License Agreement (EULA) for their drivers throws a curveball: Nvidia explicitly prohibits data center deployment of the GeForce and Titan driver software. Yes, you read that right: no data center deployment allowed.[1]

Why the restriction? Nvidia argues that GeForce and Titan GPUs weren't designed for 24×7 data center operation. Fair enough. But here's the catch: Nvidia's recommended alternative is its Tesla data center line (now sold simply as Nvidia data center GPUs, such as the A100 and H100), which comes with a hefty price tag. These GPUs are purpose-built for data centers, but they cost significantly more upfront.

Cost and Barriers to Entry

AMD Radeon: Cost-Effective and Accessible

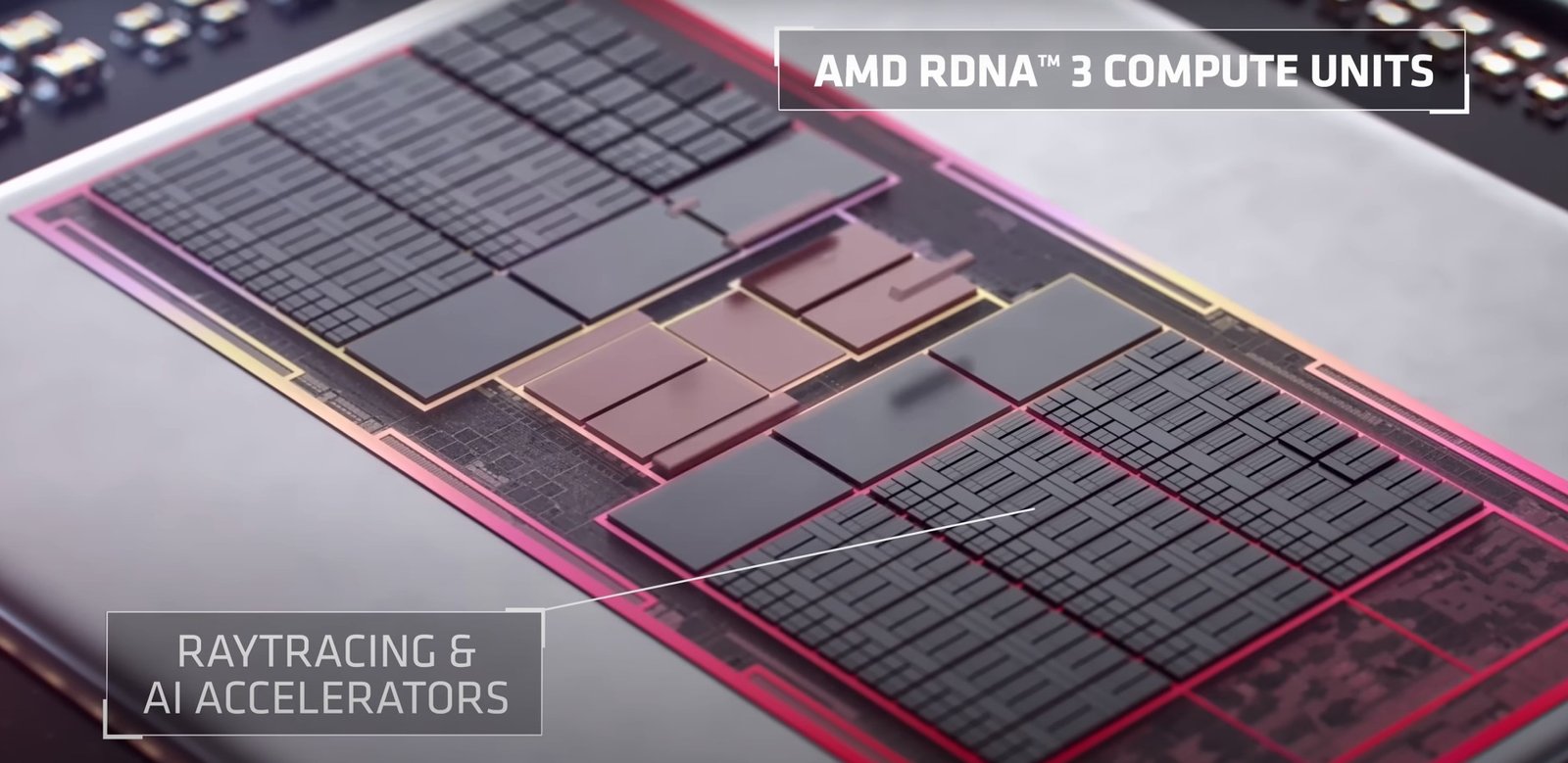

AMD's Radeon cards offer a refreshing alternative by combining affordability with performance. Radeon GPUs built on the latest RDNA 3 architecture deliver capable AI acceleration, so whether you're enhancing content creation workflows or developing machine learning models, they give you plenty of bang for your buck.

The Radeon RX 7000 Series, powered by RDNA 3, includes dedicated AI accelerators in its compute units, generous VRAM (24 GB on the flagship RX 7900 XTX), and support for popular ML frameworks such as PyTorch through AMD's ROCm software stack. Researchers and hobbyists can harness this power locally without breaking the bank, and AMD's commitment to open software platforms like ROCm further democratizes AI development.
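Getting started with ROCm from PyTorch looks much like using CUDA. The sketch below assumes a ROCm build of PyTorch (installed from PyTorch's ROCm wheels) and a supported Radeon card; on such builds `torch.version.hip` is set and AMD GPUs are addressed with the familiar `"cuda"` device string. It falls back to the CPU when no GPU is visible, runs a small matrix multiply as a smoke test, and the helper `describe_backend` is a hypothetical name used only for illustration.

```python
"""Pick a GPU device and report whether PyTorch was built for ROCm or CUDA."""
try:
    import torch
except ImportError:  # PyTorch not installed
    torch = None


def describe_backend():
    """Return a short string naming the available accelerator backend."""
    if torch is None:
        return "PyTorch not installed"
    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip; CUDA builds leave it as None.
    backend = "rocm" if getattr(torch.version, "hip", None) else "cuda"
    return f"{backend}:{torch.cuda.get_device_name(0)}"


if __name__ == "__main__" and torch is not None:
    # ROCm builds reuse the "cuda" device string for AMD GPUs.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # Half precision on the GPU, full precision on the CPU fallback.
    dtype = torch.float16 if device.type == "cuda" else torch.float32
    a = torch.randn(1024, 1024, device=device, dtype=dtype)
    b = torch.randn_like(a)
    c = a @ b  # small matrix multiply as a smoke test
    print(describe_backend(), tuple(c.shape))
```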

Nvidia GeForce: A Costly Dilemma

Now, let's revisit Nvidia. Suppose you ignore the EULA and deploy consumer-grade GeForce GPUs in your data center anyway. You'll hit a wall: you are running the driver outside its license terms, and Nvidia won't support that configuration if something goes wrong. The result? Higher costs, legal risks, and operational headaches.

Tesla GPUs, while robust, come at a premium. Nvidia’s pricing model assumes enterprise budgets, leaving smaller players stranded. The barriers to entry are real: startups, research labs, and educational institutions face an uphill battle. The promise of AI democratization fades when the gatekeepers demand a king’s ransom.

The Verdict: AMD Radeon Wins Hearts for Private AI Projects

In the battle of AMD Radeon vs. Nvidia GeForce, AMD emerges as the people's champion. Its support for P2P transfers, cost-effectiveness, and openness make Radeon GPUs an attractive choice for AI practitioners. Nvidia's Tesla GPUs remain powerful but come with strings attached.

As AI continues its ascent, remember this: innovation thrives when barriers fall. AMD’s Radeon solutions pave the way for a more inclusive AI ecosystem. So, whether you’re training neural networks or exploring generative art, consider the Radeon route. After all, AI should be about breaking boundaries, not breaking the bank.

Choose wisely, fellow AI adventurers. The future awaits, and Radeon is your trusty companion.
